Buffer temporarily holds data during transmission between devices, while cache stores frequently accessed data for quick retrieval by the system.

Buffers and caches both have a large effect on how well a computer system performs, but they solve different problems. This article explains what each one does, how they differ, and where each is used.

Key Takeaways:

- A buffer is a temporary storage area used for input/output processes, while cache is a high-speed memory component that stores frequently accessed data.

- In operating systems such as Linux, buffers typically hold data on its way to or from a block device (disk I/O), while the page cache keeps the contents of recently read files in memory.

- Buffers store the original copy of data and are implemented in the computer’s main memory, while cache stores copies of frequently accessed data and is inserted between the CPU and the main memory.

- Cache improves overall system performance by reducing memory access time, while buffers compensate for speed differences between processes during data transfers.

- Understanding the differences between buffer and cache can help optimize the utilization of these components in computer systems.

Understanding Buffers

In computer systems, buffers are vital for smooth data transfer and processing. Essentially, buffers are temporary storage spaces in the main memory that hold data during input and output operations, bridging speed gaps between processes. They’re especially useful in input/output tasks, where they let the CPU continue other tasks while handling data transfer in the background, ensuring system efficiency and avoiding slowdowns.

Buffers ensure data integrity by storing the original data copy during transfers. Typically, they’re allocated in the main memory (RAM), often using dynamic RAM, to provide enough space for temporary data storage. Understanding buffers’ role, performance, and implementation is crucial for optimizing their usage in computer systems, leading to enhanced data transfer and overall system efficiency.

Buffer Utilization in Computer Systems

Buffers play a vital role in various aspects of computer systems, including:

- Network communication: Buffers are used to store incoming and outgoing data during network transmission, ensuring reliable and efficient data transfer.

- File I/O operations: When reading from or writing to files, buffers are utilized to temporarily store data, minimizing disk access and improving overall performance.

- Database operations: Database systems keep recently used pages in an in-memory buffer pool, which reduces costly disk reads and speeds up data retrieval. In this role the buffer also acts as a cache.

- Audio and video streaming: Players store upcoming chunks of audio and video data in a buffer to absorb network latency and keep playback continuous.

By effectively utilizing buffers in these scenarios, computer systems can optimize performance, enhance data integrity, and provide a smoother user experience.
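
As a rough illustration of the file I/O case, the sketch below copies data from a source to a destination through one reusable fixed-size buffer. The function name `copy_with_buffer` and the 4096-byte size are assumptions chosen for the example, and in-memory streams stand in for real files or sockets.

```python
import io


def copy_with_buffer(src, dst, buffer_size=4096):
    """Copy src to dst through a fixed-size buffer.

    Returns (bytes copied, number of chunks transferred).
    """
    buffer = bytearray(buffer_size)   # reusable temporary holding area
    view = memoryview(buffer)
    total = 0
    chunks = 0
    while True:
        n = src.readinto(buffer)      # fill the buffer from the source
        if not n:                     # 0 means end of data
            break
        dst.write(view[:n])           # drain only the bytes that were filled
        total += n
        chunks += 1
    return total, chunks


# In-memory streams stand in for a file or device (illustrative only).
source = io.BytesIO(b"x" * 10_000)
sink = io.BytesIO()
copied, chunks = copy_with_buffer(source, sink, buffer_size=4096)
print(copied, chunks)
```

A larger buffer means fewer read and write calls for the same amount of data, at the cost of more memory held while the transfer runs.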

| Buffer Performance | Buffer Utilization |
|---|---|
| Buffers help in compensating for speed differences between processes that exchange or utilize data, reducing bottlenecks and improving system performance. | Buffer utilization depends on the specific operation and the volume of data being transferred. It aims to maintain data integrity and prevent congestion during input/output processes. |
| By efficiently managing buffers, system performance can be optimized, ensuring smooth and uninterrupted data transfer. | Buffer utilization is a critical factor in achieving optimal system performance. Proper buffer management and sizing are essential to prevent underutilization or overflow situations that could degrade performance. |
| Buffer performance can be measured based on factors such as response time, throughput, and error rates. | Buffer utilization is often calculated as a percentage of the buffer capacity used during a specific operation. |

Understanding buffer performance and utilization is crucial for developers, system administrators, and anyone involved in designing or optimizing computer systems. By leveraging buffers effectively, system performance can be maximized, resulting in better user experiences and efficient data processing.
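
The utilization percentage mentioned in the table can be computed directly. This is a minimal sketch; the function name `buffer_utilization` and the byte counts are hypothetical values used only for illustration.

```python
def buffer_utilization(bytes_in_use, capacity):
    """Return the share of the buffer currently occupied, as a percentage."""
    if capacity <= 0:
        raise ValueError("capacity must be positive")
    if not 0 <= bytes_in_use <= capacity:
        raise ValueError("bytes_in_use must be between 0 and capacity")
    return 100.0 * bytes_in_use / capacity


# Hypothetical reading: 3072 bytes held in a 4096-byte buffer.
utilization = buffer_utilization(3072, 4096)
print(f"{utilization:.1f}%")  # prints "75.0%"
```

A value that stays near 100% suggests the buffer may overflow under load, while a value that stays near 0% suggests it is larger than it needs to be.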

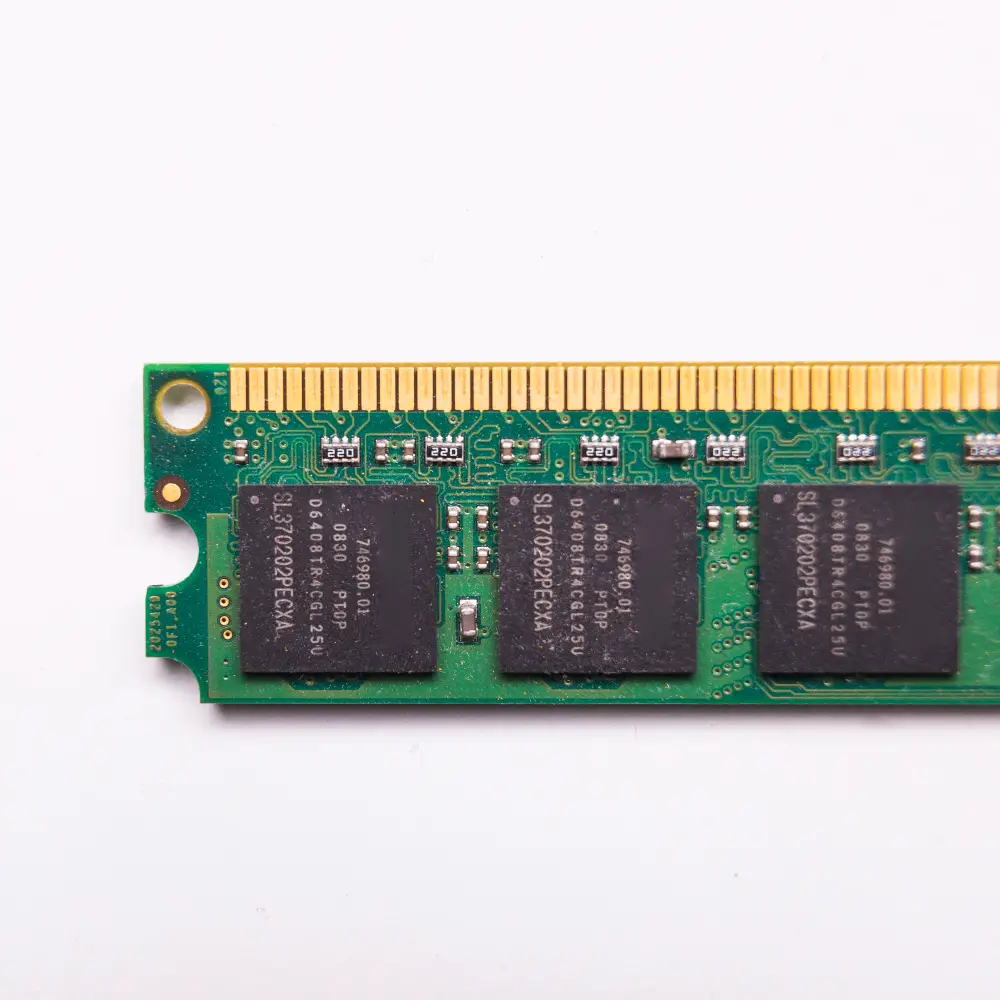

Understanding Cache

Cache memory, positioned between the CPU and the main memory, is crucial for computer systems as it swiftly retrieves frequently accessed data, programs, and instructions, thereby reducing memory access time and enhancing overall performance. This high-speed storage area, made of faster static RAM (SRAM), is usually located on the processor chip, ensuring quick access for the CPU and minimizing delays associated with retrieving data from the slower main memory.

Cache memory operates in multiple levels, such as L1, L2, and L3 caches, each with varying capacities and speeds. While L1 cache is the fastest but has limited capacity, L3 cache offers more storage but with slower access times. Together, these cache levels optimize data access efficiency, contributing significantly to the seamless operation and performance enhancement of computer systems.

To better understand the components of cache memory and its performance, let’s take a closer look at the different cache types and their characteristics:

Cache Types

Cache memory can be categorized into different types based on its level and purpose within the computer system. Here are the common cache types:

| Cache Type | Description |
|---|---|
| L1 Cache | Also known as primary cache, L1 is the fastest cache level and is located within the processor chip. It stores the most frequently accessed data and instructions for rapid retrieval by the CPU. |
| L2 Cache | Sits between the L1 cache and the main memory and serves as a secondary cache. It has a larger capacity than L1 cache but is somewhat slower. |
| L3 Cache | An additional cache level that some computer systems place behind the L2 cache. It has a higher capacity than L2 cache but slower access, and on multi-core processors it is commonly shared between cores. |

A comprehensive understanding of cache types and their specific characteristics is essential for optimizing cache utilization and improving overall system performance.
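
One common way to reason about how these levels reduce memory access time is the average memory access time calculation, in which each level contributes its hit latency plus its miss rate multiplied by the cost of going one level further out. The sketch below applies that calculation; the latencies (in cycles) and miss rates are made-up numbers for illustration, not measurements of any real processor.

```python
def average_access_time(levels, memory_latency):
    """Estimate the average access time of a cache hierarchy.

    levels: list of (hit_latency, miss_rate) pairs ordered from L1 outward,
            where miss_rate is the fraction of requests reaching that level
            which miss in it.
    memory_latency: cost of an access that misses every cache level.
    """
    time = memory_latency
    # Work from the outermost level back toward L1.
    for hit_latency, miss_rate in reversed(levels):
        time = hit_latency + miss_rate * time
    return time


# Hypothetical hierarchy: (latency in cycles, miss rate) for L1, L2, L3.
levels = [(1, 0.10), (10, 0.50), (40, 0.50)]
amat = average_access_time(levels, memory_latency=200)
print(amat)  # 9.0 cycles on average, versus 200 with no cache at all
```

With these assumed numbers, most requests are served by the small, fast L1 cache, so the average cost stays close to the L1 latency even though main memory is far slower.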

Differences between Buffer and Cache

While both buffers and caches play crucial roles in computer systems, there are distinct differences between them in terms of functionality and data storage.

Buffer

A buffer is primarily utilized for input/output (I/O) processes, serving as a temporary storage area for data during transfer. It temporarily holds the original copy of the data and is implemented in the computer’s main memory (RAM).

Cache

On the other hand, cache is specifically designed to store frequently accessed data, resulting in faster retrieval and overall system performance improvements. Cache memory is organized in levels, such as L1, L2, and L3 caches, to reduce CPU access time. It is usually built from faster static RAM (SRAM) rather than the dynamic RAM used for main memory.

Therefore, the main distinctions between buffer and cache can be summarized as follows:

- A buffer is used for temporary storage during I/O processes, while cache stores frequently accessed data.

- Buffers store the original copy of data, while cache contains copies of frequently accessed data.

- Buffers are implemented in the computer’s main memory (RAM), while cache memory is inserted between the CPU and the main memory.

- Cache memory utilizes different levels and types to improve CPU access time, while buffers do not directly affect CPU performance.
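
The "original versus copy" distinction in the list above can be shown with a small sketch. The names `backing_store`, `buffer`, and `read` are hypothetical; a `deque` stands in for a buffer and a dictionary stands in for a cache.

```python
from collections import deque

# Hypothetical slow storage that holds the original data.
backing_store = {"a": 1, "b": 2}

# Buffer: data passes through once. After it is consumed,
# the buffer no longer holds it.
buffer = deque()
buffer.append("packet-1")
buffer.append("packet-2")
delivered = [buffer.popleft(), buffer.popleft()]

# Cache: keeps a copy for later reads. The original stays in the store.
cache = {}


def read(key):
    """Return the value for key, copying it into the cache on first use."""
    if key not in cache:
        cache[key] = backing_store[key]   # miss: fetch and keep a copy
    return cache[key]                     # hit: served from the copy


first = read("a")    # fetched from backing_store
second = read("a")   # served from the cached copy
print(delivered, len(buffer), cache)
```

After the run the buffer is empty, while the cache still holds its copy and the backing store is unchanged.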

Buffer Characteristics

Buffers have specific characteristics that distinguish them from other memory components. A buffer is an ordinary-speed storage area for temporary data. It is allocated in the computer’s main memory (RAM), which is built from dynamic RAM (DRAM). Buffers are used for input/output processes and hold the original copy of the data. Unlike a cache, they do not speed up the CPU’s memory accesses.

Cache Characteristics

Cache memory is a crucial component with distinctive characteristics that set it apart from other memory elements. It serves as a high-speed storage area tailored for frequently accessed data, significantly enhancing system performance. Let’s delve into the cache qualities that make it an invaluable asset in computer systems:

1. Storage Area

The cache memory, constructed using faster static RAM (SRAM), is strategically inserted between the CPU and the main memory. This placement ensures rapid data retrieval, reducing overall memory access time and enhancing CPU efficiency.

2. Copying of Original Data

Cache memory operates by storing copies of frequently accessed data. By keeping this data readily available, the cache significantly improves the CPU’s ability to swiftly retrieve and execute instructions or process data, leading to faster overall system performance.

3. Different Levels and Types

Cache memory is available in various levels and types, including L1, L2, and L3 cache. Each level has distinct capacities and speeds, tailored to the specific requirements of different computer systems. This tiered structure allows for efficient data storage and retrieval, further optimizing CPU access time and overall system performance.

The following table compares the cache levels:

| Cache Level | Location | Capacity | Speed |
|---|---|---|---|
| L1 Cache (Primary Cache) | Located on the processor chip | Smallest capacity (typically tens of KB per core) | Extremely fast access time |
| L2 Cache (Secondary Cache) | Located between the L1 cache and the main memory | Larger capacity (typically hundreds of KB to a few MB) | Slower than L1 cache, but faster than main memory access time |
| L3 Cache | Found in some systems behind the L2 cache | Highest capacity (typically a few MB to tens of MB or more) | Slower than L1 and L2 cache, but faster than main memory access time |

The table distinguishes the cache levels by location, capacity, and access speed.

With its specific characteristics, cache memory plays a pivotal role in optimizing system performance by improving CPU access time and efficiently storing frequently accessed data.
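
To make the idea of keeping copies of frequently accessed data concrete, here is a small software cache with a fixed capacity. It evicts the least recently used entry, which is one common replacement policy and is used here as an assumption; real hardware caches use their own policies and organization. The class name `LRUCache` and the `slow_load` function are hypothetical.

```python
from collections import OrderedDict


class LRUCache:
    """Fixed-capacity cache that evicts the least recently used entry."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.entries = OrderedDict()   # ordered from oldest to newest use
        self.hits = 0
        self.misses = 0

    def get(self, key, load):
        """Return the cached value, calling load(key) only on a miss."""
        if key in self.entries:
            self.hits += 1
            self.entries.move_to_end(key)      # mark as most recently used
            return self.entries[key]
        self.misses += 1
        value = load(key)                      # slow path: fetch the original
        self.entries[key] = value              # keep a copy for next time
        if len(self.entries) > self.capacity:
            self.entries.popitem(last=False)   # evict least recently used
        return value


def slow_load(key):
    """Stand-in for a slow fetch from main memory or disk."""
    return key * key


cache = LRUCache(capacity=2)
results = [cache.get(k, slow_load) for k in (1, 2, 1, 3, 2)]
print(results, cache.hits, cache.misses)  # [1, 4, 1, 9, 4] 1 4
```

Only the third request is a hit here. Key 2 is evicted to make room for key 3, so the final request for key 2 has to be loaded again, which shows how capacity limits what a cache can keep close at hand.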

Types of Buffer

When it comes to computer systems, there are different types of buffers that serve various purposes. Let’s take a closer look at some of the most common types:

Single Buffer

A single buffer is a straightforward approach to data transfer between two devices. It stores data temporarily, enabling smooth communication between the devices. This type of buffer can be found in applications like streaming media, where a continuous flow of data is required.

Double Buffer

A double buffer, as the name suggests, utilizes two buffers for data transfer. This approach allows the data in one buffer to be processed while the other buffer is being filled with new data. Double buffering is commonly used in graphics processing, where it minimizes screen flickering and enhances display performance.
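
A minimal sketch of double buffering follows. The class name `DoubleBuffer` and its methods are hypothetical; the writer only touches the back buffer, the reader only sees the front buffer, and the two exchange roles once a frame is complete.

```python
class DoubleBuffer:
    """Two equally sized buffers: one being read, one being written."""

    def __init__(self, size):
        self.front = [0] * size   # currently visible to the reader
        self.back = [0] * size    # currently being filled by the writer

    def write(self, index, value):
        """Write into the back buffer without disturbing the reader."""
        self.back[index] = value

    def swap(self):
        """Exchange the roles of the two buffers."""
        self.front, self.back = self.back, self.front

    def read(self):
        """Return a snapshot of the front buffer."""
        return list(self.front)


frames = DoubleBuffer(3)
frames.write(0, 7)
before_swap = frames.read()   # the reader still sees the old frame
frames.swap()
after_swap = frames.read()    # the new frame becomes visible all at once
print(before_swap, after_swap)  # [0, 0, 0] [7, 0, 0]
```

Because the reader never sees a half-written frame, partially drawn images do not reach the screen, which is the flicker reduction described above.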

Circular Buffer

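A circular buffer, also known as a ring buffer, generalizes double buffering to more than two buffers or slots arranged in a loop: the writer fills slots in order and wraps around to the first slot after the last, while the reader follows behind and frees slots as it consumes them. This allows continuous writing and reading operations and improves the data transfer rate. Circular buffers are commonly employed in real-time systems, such as audio and video streaming, where a steady stream of data is crucial.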
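
The wrap-around behavior can be sketched with a fixed list and two counters. The class name `RingBuffer` and its `put` and `get` methods are hypothetical, and this version raises an error when full rather than overwriting old data, which is one of several possible design choices.

```python
class RingBuffer:
    """Fixed-capacity FIFO buffer whose slots are reused in a loop."""

    def __init__(self, capacity):
        self.slots = [None] * capacity
        self.capacity = capacity
        self.head = 0     # index of the oldest item
        self.count = 0    # number of items currently stored

    def put(self, item):
        if self.count == self.capacity:
            raise OverflowError("ring buffer is full")
        tail = (self.head + self.count) % self.capacity  # wraps around
        self.slots[tail] = item
        self.count += 1

    def get(self):
        if self.count == 0:
            raise IndexError("ring buffer is empty")
        item = self.slots[self.head]
        self.slots[self.head] = None                     # free the slot
        self.head = (self.head + 1) % self.capacity      # wraps around
        self.count -= 1
        return item


ring = RingBuffer(3)
for sample in ("a", "b", "c"):
    ring.put(sample)
first = ring.get()                         # "a" leaves, freeing slot 0
ring.put("d")                              # wraps around into slot 0
drained = [ring.get() for _ in range(3)]
print(first, drained)  # a ['b', 'c', 'd']
```

Items come out in the order they went in, and no data is ever shifted in memory, which is why this structure suits steady audio and video streams.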

Buffer Swapping

Buffer swapping refers to the process of exchanging the roles of buffers in a data transfer operation. This technique is often used to prevent data loss or ensure uninterrupted communication between devices. Buffer swapping can be implemented in software and hardware systems, depending on the specific requirements and constraints.

Overall, buffers play a vital role in facilitating efficient data transfer and communication within computer systems. By using different types of buffers, system designers can optimize performance and ensure seamless operations.

Types of Cache

Cache memory plays a crucial role in enhancing overall system performance by reducing memory access time. Cache memory can be classified into different types based on its location and purpose:

Primary Cache (Level 1 Cache)

Primary cache, also known as Level 1 (L1) cache, is the fastest and is directly built into the processor chip. It provides quick access to frequently used data and instructions. Given its proximity to the CPU, primary cache ensures minimal latency and enables rapid retrieval of information.

Secondary Cache (Level 2 Cache)

Situated between the primary cache and the main memory, secondary cache, or Level 2 (L2) cache, serves as a bridge. It acts as a larger storage area compared to the primary cache and contains frequently accessed data that doesn’t fit in the primary cache. Level 2 cache helps improve performance by reducing the frequency of memory accesses to the main memory.

Level 3 Cache

Level 3 (L3) cache is utilized in certain systems that employ Level 2 cache. L3 cache has a higher capacity compared to L2 cache and provides additional storage for data that doesn’t fit in the L1 and L2 caches. Its purpose is to further bolster performance by accommodating larger amounts of frequently accessed data.

The distinct levels of cache cater to different speeds and capacities, allowing computer systems to efficiently handle data transfers and retrieval.

| Cache Type | Location | Purpose |
|---|---|---|
| Primary Cache (L1 Cache) | Processor chip | Rapid retrieval of frequently used data and instructions |
| Secondary Cache (L2 Cache) | Between the primary cache and the main memory | Storage for frequently accessed data that doesn’t fit in primary cache, reducing memory access frequency |
| Level 3 Cache (L3 Cache) | Behind the L2 cache, on systems that have one | Higher capacity storage for additional frequently accessed data |
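
The way a request travels through the levels can be sketched as a simple simulation: each level is checked in turn, and a value found further out is copied into the faster levels on its way back. The names `Level` and `load`, the tiny capacities, and the evict-oldest policy are all assumptions made for the example and do not model any particular processor.

```python
from collections import OrderedDict


class Level:
    """One cache level with a fixed number of entries."""

    def __init__(self, name, capacity):
        self.name = name
        self.capacity = capacity
        self.lines = OrderedDict()

    def insert(self, address, value):
        self.lines[address] = value
        self.lines.move_to_end(address)
        if len(self.lines) > self.capacity:
            self.lines.popitem(last=False)   # evict the oldest entry


def load(address, levels, memory):
    """Return (value, name of the level that served the request)."""
    for depth, level in enumerate(levels):
        if address in level.lines:
            value = level.lines[address]
            source = level.name
            break
    else:
        # Missed in every cache level: go to main memory.
        value = memory[address]
        source = "memory"
        depth = len(levels)
    # Copy the value into every level closer to the CPU than the source.
    for level in levels[:depth]:
        level.insert(address, value)
    return value, source


memory = {addr: addr * 10 for addr in range(8)}
levels = [Level("L1", 1), Level("L2", 2), Level("L3", 4)]
served_by = [load(addr, levels, memory)[1] for addr in (0, 1, 0, 1, 1)]
print(served_by)  # ['memory', 'memory', 'L2', 'L2', 'L1']
```

The one-entry L1 keeps losing its contents as the two addresses alternate, but the larger L2 catches those requests before they reach main memory, which is the role described for the secondary cache.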

Cache vs Buffer Performance

Cache memory and buffers are both vital components of computer systems, but they improve performance in different ways. By storing frequently accessed data, cache memory significantly reduces memory access time, which speeds up data retrieval by the CPU. Buffers, in contrast, are mainly used in input/output processes, where they temporarily hold data to compensate for speed differences between the producer and the consumer of that data, so the CPU can continue working instead of waiting.

Buffers act as temporary storage areas for data during transfers between devices, ensuring smooth and uninterrupted data flow. They hold the original copy of the data and facilitate seamless communication between various processes. Cache memory, however, focuses on speeding up data retrieval by storing frequently accessed data and reducing memory access time. By understanding the distinct roles of cache memory and buffers, users can optimize their computer systems for efficient performance and smooth data processing.
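
The speed-matching role of a buffer can be sketched with a bounded queue between a fast producer and a slower consumer. The function names, the queue size of 4, and the 1 ms delay are assumptions chosen for illustration.

```python
import queue
import threading
import time


def producer(buf, items):
    """Fast side: hand items to the buffer as quickly as it accepts them."""
    for item in items:
        buf.put(item)      # blocks only while the buffer is full
    buf.put(None)          # sentinel: no more data


def consumer(buf, received):
    """Slow side: take items from the buffer at its own pace."""
    while True:
        item = buf.get()   # blocks only while the buffer is empty
        if item is None:
            break
        time.sleep(0.001)  # simulate a slower device
        received.append(item)


buf = queue.Queue(maxsize=4)   # the buffer: at most 4 items in flight
received = []
threads = [
    threading.Thread(target=producer, args=(buf, range(20))),
    threading.Thread(target=consumer, args=(buf, received)),
]
for t in threads:
    t.start()
for t in threads:
    t.join()
print(received == list(range(20)))  # True: nothing lost, order preserved
```

The producer never waits on each individual item, only when the buffer is completely full, and every item is delivered exactly once, in contrast to a cache, which keeps copies around for repeated reads.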

Main Differences Recap

To summarize, there are distinct differences between buffers and cache in terms of their primary functions and data storage characteristics. Here’s a recap of these differences:

Functions:

- Buffers are primarily used for input/output processes, temporarily holding data during transfers.

- Cache is designed to store frequently accessed data for faster retrieval.

Data Storage:

- Buffers store the original copy of data.

- Cache stores copies of the original data.

Implementation:

- Buffers are implemented in the main memory.

- Cache is inserted between the CPU and the main memory.

Utilization:

- Cache memory utilizes different levels and types to improve CPU access time and overall system performance.

- Buffers compensate for speed differences between processes, allowing the CPU to continue with other tasks while data is stored and transferred.

Understanding these distinctions between buffers and cache is crucial for optimizing the utilization of these components in computer systems.

Conclusion

Understanding the differences between buffers and cache is crucial for improving computer system performance. While both are essential for storing and retrieving data, they serve distinct purposes.

Buffers are temporary storage areas used during input/output processes, allowing the CPU to continue tasks while data is transferred. They store the original data copy in the computer’s main memory (RAM). In contrast, cache is designed to store frequently accessed data for quick retrieval, reducing memory access time and enhancing system performance. With different cache levels like L1, L2, and L3, cache memory ensures efficient data access, often implemented using faster static RAM (SRAM) on the processor chip. By grasping these differences, users can optimize both buffers and cache to improve system performance and streamline computing experiences.

FAQ

What is the difference between a buffer and cache?

A buffer is a temporary storage area used for input/output processes, holding data while it is waiting to be transferred. On the other hand, a cache is a high-speed memory component that stores frequently accessed data for faster retrieval.

How are buffers and cache utilized in computer systems?

Buffers are commonly used for disk I/O operations and temporary data storage during transfers. Cache keeps copies of data that has already been read, such as file contents, so that repeated reads are faster and overall system performance improves.

What is a buffer’s primary function and where is it implemented?

A buffer’s primary function is to compensate for speed differences between processes that exchange or use data. It is implemented in the computer’s main memory (RAM).

What is the primary purpose of cache memory and where is it located?

Cache memory’s primary purpose is to reduce memory access time and increase overall computer speed. It is inserted between the CPU and the main memory.

How do buffers and cache differ in terms of data storage?

Buffers store the original copy of data and are implemented in the computer’s main memory, while cache stores copies of frequently accessed data and is implemented using faster static RAM.

What are the different types of buffers found in computer systems?

There are different types of buffers, including single buffer, double buffer, and circular buffer, each with varying capabilities to improve data transfer rate.

Are there different types of cache memory?

Yes, cache memory can be categorized into different levels and types, including primary cache (L1), secondary cache (L2), and sometimes, Level 3 (L3) cache, depending on the system.

How do buffer and cache performance differ?

Cache memory improves system performance by reducing memory access time and storing frequently accessed data for quicker retrieval. Buffers, on the other hand, are utilized for input/output processes and temporary data storage during transfers.

What are the main differences between a buffer and cache?

The main differences between a buffer and cache lie in their primary functions and data storage characteristics. Buffers are mainly used for temporary data storage during input/output processes, while cache is designed to store frequently accessed data for faster retrieval.

What are the characteristics of a buffer?

Buffers act as normal-speed storage areas for temporary data storage, store the original copy of data, and are implemented in the computer’s main memory using dynamic RAM.

What are the characteristics of cache memory?

Cache memory serves as a high-speed storage area for frequently accessed data, uses faster static RAM, and is inserted between the CPU and the main memory to improve system performance.

Image Credits

Featured Image By – Freepik

Image 1 By – Freepik

Image 2 By – Freepik